Building a ComfyUI pipeline

It’s no wonder tools like ComfyUI are gaining traction. I decided to step into this node-based environment, explore its potential, and understand why so many major players in the Media & Entertainment industry are adopting AI-driven solutions.

ComfyUI is a highly configurable, node-based platform where users can generate images or videos using a wide range of vision models and constraint layers. Combined, these elements enable visual consistency and more predictable outputs.

For this project, I used a multi-pass generation strategy, a concept borrowed from my compositing background. The idea is simple: break the shot into isolated elements and develop them independently.

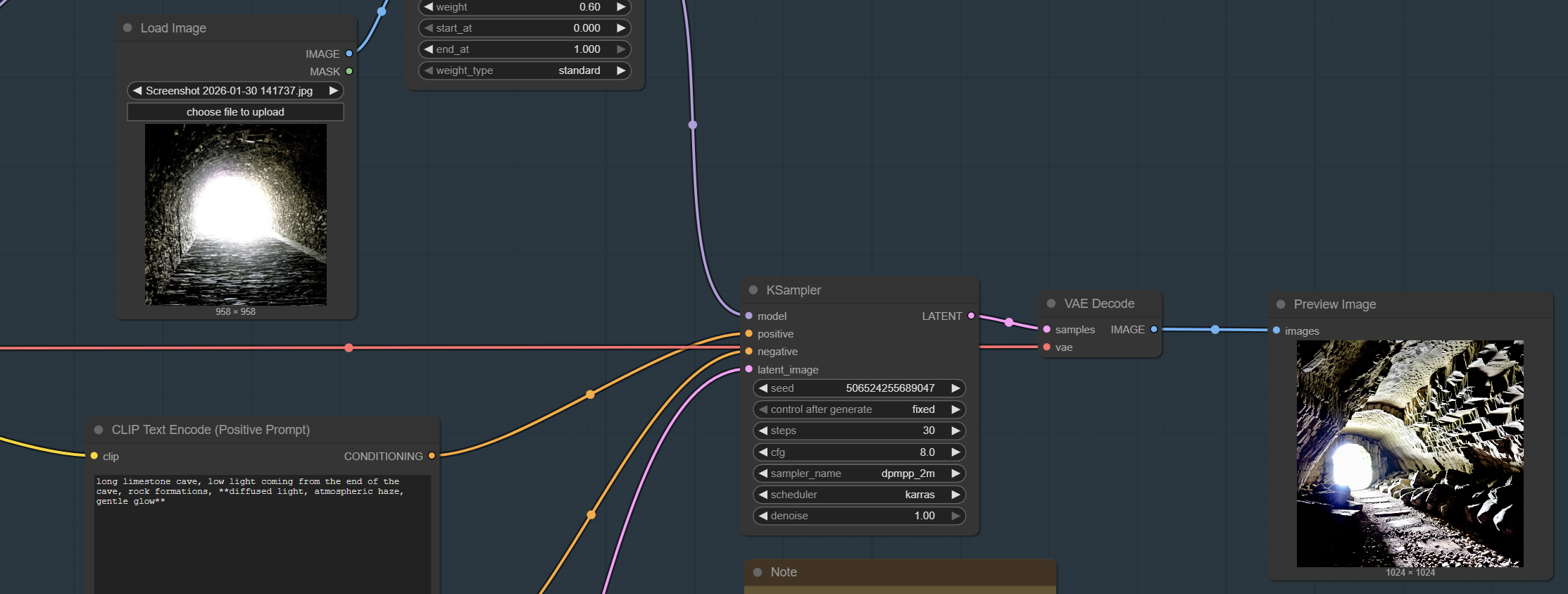

For the first pass, I defined the environment based on an image reference, semantic prompts, and a high noise parameter until I reached an output that aligned with my intent. A key feature here is seed locking after fine-tuning parameters, ensuring the result can always be reproduced. This becomes especially valuable when sharing projects with other decision-makers.
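
Seed locking can also be applied outside the UI. The following is a minimal sketch, assuming the workflow has been exported in ComfyUI's API JSON format, where each `KSampler` node carries a `seed` input. The node ids, prompt text, seed value, and the `lock_seed` helper are hypothetical and only there for illustration.

```python
import copy


def lock_seed(workflow: dict, seed: int) -> dict:
    """Return a copy of an API-format workflow with every KSampler pinned to `seed`."""
    locked = copy.deepcopy(workflow)  # leave the caller's graph untouched
    for node in locked.values():
        if node.get("class_type") == "KSampler":
            node["inputs"]["seed"] = seed
    return locked


# Hypothetical two-node fragment; a real export contains many more nodes.
workflow = {
    "3": {"class_type": "KSampler",
          "inputs": {"seed": 0, "steps": 20, "cfg": 7.0, "denoise": 1.0}},
    "6": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "cave interior"}},
}

locked = lock_seed(workflow, seed=123456789)
print(locked["3"]["inputs"]["seed"])
```

A fixed seed only reproduces a result when the rest of the graph (model, sampler settings, prompts, and input images) is unchanged too, so the whole exported file is what should be shared.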

In the second stage, I refined the environment by introducing styled models (LoRAs), IP Adapters, and ControlNet layers. These components worked together to enhance the base pass visually. Once again, seed locking and prompt refinement were essential to maintain control.

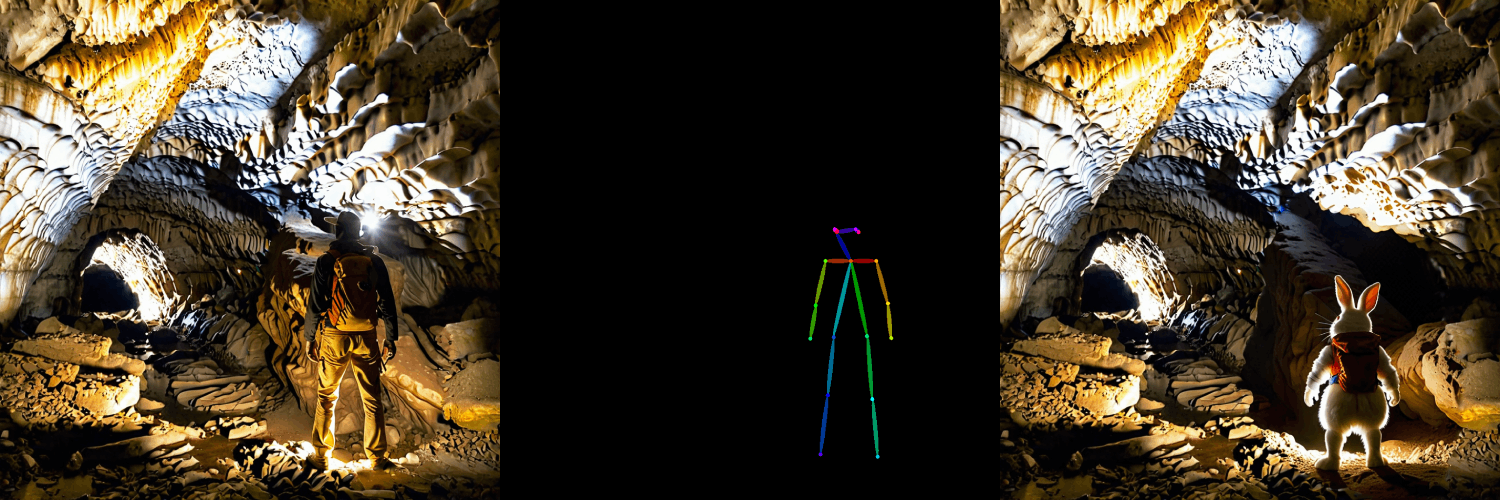
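
To make the second-stage stack more concrete, here is a rough sketch of how a LoRA and a ControlNet might be chained in an API-format graph, where each link is written as `[source_node_id, output_index]`. It assumes the core node types `CheckpointLoaderSimple`, `LoraLoader`, `CLIPTextEncode`, `ControlNetLoader`, `LoadImage`, and `ControlNetApply`. All file names, strengths, and the `dangling_links` helper are hypothetical, and the IP Adapter is left out because it comes from a custom node pack.

```python
def dangling_links(workflow: dict) -> list:
    """List (node_id, input_name) pairs whose link points at a node that is missing."""
    missing = []
    for node_id, node in workflow.items():
        for name, value in node["inputs"].items():
            # A link is a two-item list: [source_node_id, output_index].
            is_link = (isinstance(value, list) and len(value) == 2
                       and isinstance(value[0], str))
            if is_link and value[0] not in workflow:
                missing.append((node_id, name))
    return missing


# Hypothetical file names and strengths, for illustration only.
workflow = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "base_model.safetensors"}},
    "2": {"class_type": "LoraLoader",
          "inputs": {"lora_name": "environment_style.safetensors",
                     "strength_model": 0.8, "strength_clip": 0.8,
                     "model": ["1", 0], "clip": ["1", 1]}},
    "3": {"class_type": "CLIPTextEncode",
          "inputs": {"text": "cave environment", "clip": ["2", 1]}},
    "4": {"class_type": "ControlNetLoader",
          "inputs": {"control_net_name": "environment_controlnet.safetensors"}},
    "5": {"class_type": "LoadImage", "inputs": {"image": "reference.png"}},
    "6": {"class_type": "ControlNetApply",
          "inputs": {"conditioning": ["3", 0], "control_net": ["4", 0],
                     "image": ["5", 0], "strength": 0.6}},
}

print(dangling_links(workflow))
```

The prompt is encoded with the CLIP output of the LoRA loader rather than the checkpoint, so the style adjustment reaches the text conditioning before the ControlNet constrains it.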

The third stage focused on creating the hero subject: an explorer bunny, seen from behind, staring into the cave with a backpack. The goal was to combine a photorealistic foundation with subtle stylization. I used a human skeletal rig to define the overall posture while preserving rabbit-specific traits such as fur, long ears, and leg proportions. Not overly humanized. Not overly stylized. To achieve this, I applied an OpenPose ControlNet and a LoRA trained for photorealistic animals.

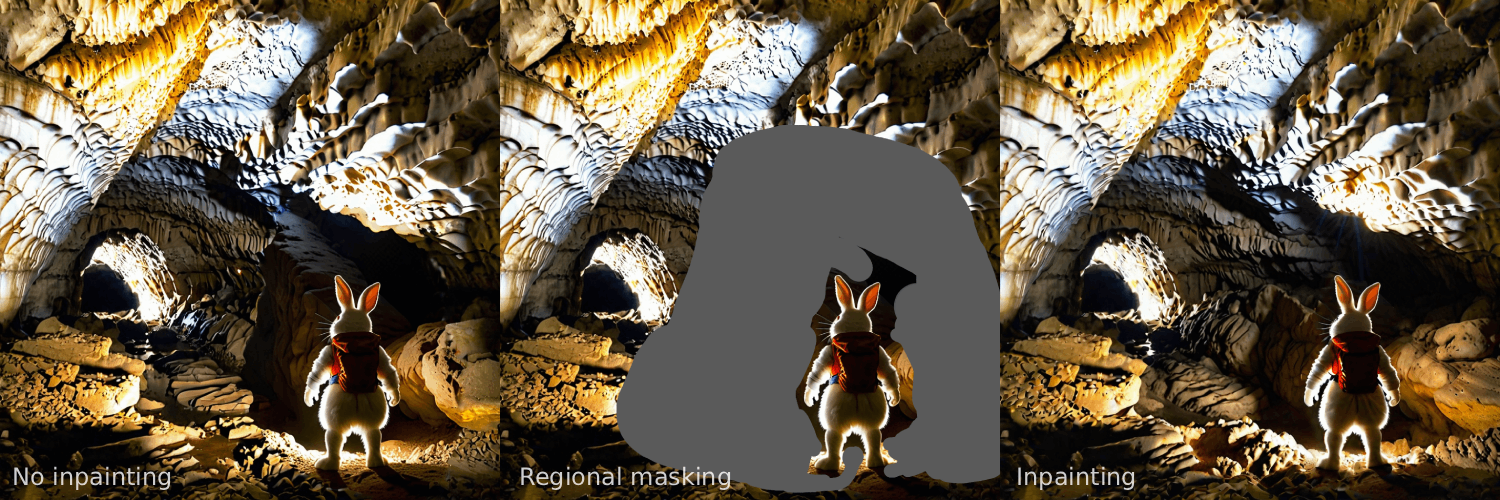

The fourth stage involved fine-tuning the subject through regional masking and inpainting to better integrate environmental elements and lighting conditions. This was the most demanding pass, requiring careful judgment and manual masking to refine the details.
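
The masking idea will be familiar from compositing: the mask decides, per pixel, how much of the newly generated content replaces the original. The sketch below shows that generic blend on plain Python lists. It is not ComfyUI's internal inpainting code, and the function name and sample values are hypothetical.

```python
def masked_blend(original, generated, mask):
    """Per-pixel blend: mask 1.0 takes the generated value, 0.0 keeps the original."""
    return [
        [m * g + (1.0 - m) * o for o, g, m in zip(o_row, g_row, m_row)]
        for o_row, g_row, m_row in zip(original, generated, mask)
    ]


# One row of three grayscale pixels; the mask ramps from "keep" to "replace".
original = [[0.2, 0.2, 0.2]]
generated = [[0.8, 0.8, 0.8]]
mask = [[0.0, 0.5, 1.0]]

print(masked_blend(original, generated, mask))
```

Intermediate mask values, as in the middle pixel, are what a feathered mask edge produces, which is why soft edges help a regenerated region sit inside its surroundings.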

The fifth pass focused on overall image refinement (img2img). Here, I introduced atmospheric elements such as specular highlights, volumetric god rays, and debris. Secondary LoRAs, prior seed locking, and selective inpainting ensured that primary features remained intact while layering additional detail. Finally, the image was upscaled once all refinements were complete.
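
In an img2img pass the sampler starts from an encoded image rather than an empty latent, and the `denoise` input controls how far the result may drift from it. The following is a minimal sketch, assuming an API-format graph built from `LoadImage`, `VAEEncode`, and `KSampler`. The file names, node ids, the 0.35 value, and the `set_denoise` helper are hypothetical, and the fragment omits the prompt and model inputs a full graph needs.

```python
def set_denoise(workflow: dict, sampler_id: str, denoise: float) -> None:
    """Set the denoise strength on one KSampler node, rejecting out-of-range values."""
    if not 0.0 <= denoise <= 1.0:
        raise ValueError("denoise must be between 0.0 and 1.0")
    node = workflow[sampler_id]
    if node["class_type"] != "KSampler":
        raise ValueError(f"node {sampler_id} is not a KSampler")
    node["inputs"]["denoise"] = denoise


# Hypothetical refinement fragment: load the previous pass, encode it, resample it.
refine_pass = {
    "1": {"class_type": "CheckpointLoaderSimple",
          "inputs": {"ckpt_name": "base_model.safetensors"}},
    "2": {"class_type": "LoadImage", "inputs": {"image": "previous_pass.png"}},
    "3": {"class_type": "VAEEncode",
          "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
    "4": {"class_type": "KSampler",
          "inputs": {"seed": 123456789, "denoise": 1.0,
                     "latent_image": ["3", 0]}},
}

# A lower value keeps more of the source image; 1.0 ignores it entirely.
set_denoise(refine_pass, "4", 0.35)
print(refine_pass["4"]["inputs"]["denoise"])
```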

I’ve seen AI-based pipelines used for internal approvals, previs checkpoints, and asset generation including character animation and texture work. With ComfyUI, you can build structured, functional tools that balance creative control with computational cost. In that sense, it operates much like other DCC applications, with the added advantage of rapid iteration.

Some of the major competitors are positioning themselves as early adopters of Stable Diffusion–based architectures. However, real adoption requires more than experimentation. It demands scalable, modular pipelines, a solid understanding of core AI/ML concepts, and the ability to constrain models effectively so outputs are deterministic rather than one-off, lucky results.

In that context, shareholders and internal teams are building entirely new departments around AI technologies, aiming to create systems that move beyond the initial hype cycle.

Will we get there? And that question doesn’t even address critical factors such as IP ownership and legal clarity surrounding many of these tools.
